Update: Looking for NFS instead of iSCSI? Check out this post: How to set up VMware ESXi, a Synology NFS NAS, and Failover Storage Networking

This week, I’ve been working on a lightweight virtualization infrastructure for a customer, and I thought you’d like to see a little of how I put it together. The customer wasn’t really interested in paying for a full SAN solution that would include chassis redundancy and high performance. They opted instead for a 12-bay Synology RS2414RP+, a couple of HP servers for ESXi hosts, and a Cisco 2960 Layer 2 Gigabit storage switch, all tied together with VMware vSphere Essentials Plus.

While not exactly a powerhouse in terms of speed and reliability, this entry-level virtualization platform should introduce them to the world of virtual servers, drastically reduce rack space and power consumption, provide the flexibility they need to recover quickly from server hardware outages, and let them migrate more easily off their aging server hardware and operating systems, all without breaking the bank. Today, I’m going to show you how I set up Active/Active MPIO using redundant links on both the ESXi hosts and the Synology NAS, allowing for multipath failover and full utilization of all network links.

First, a little bit about the hardware used. The Synology RS2414RP+ is running Synology’s DSM version 5 operating system and carries 4 x 1Gb Ethernet ports and 12 drive bays, three of which hold 3TB drives (9TB raw). This model also supports all four VAAI primitives, which will be important when we make decisions about how to create our LUNs. This support will allow us to offload certain storage-specific tasks to the NAS, which dramatically reduces network and compute workloads on the hosts.

The hosts are regular HP DL380 2U servers with 40GB of RAM, each carrying 4 x 1Gb Ethernet ports. Because they only have four onboard 1Gb ports, we lose a lot of speed and redundancy options on the networking side, but since this customer is only going to have a handful of lightly-to-moderately used VM guests, they shouldn’t experience any deal-killer performance problems. In any case, they’ll experience much better response times than what they are currently seeing on their old gear. In another post, I’ll run some Iometer tests to show actual IOPS to get an idea of expected performance.

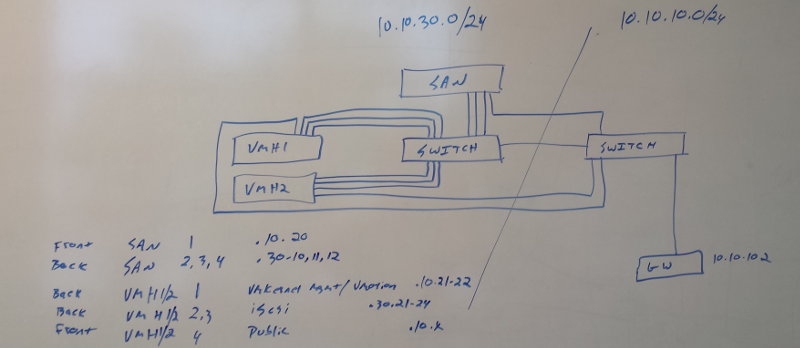

Here is my very quick-and-dirty whiteboard layout:

Hosts:

1 port for Management/vMotion

2 ports for iSCSI storage

1 port for Public network access to VM guests

NAS:

1 port for Public network management access

3 ports for iSCSI storage

The iSCSI storage and the vMotion ports are all on a dedicated back-end network switch. The Public ports will go to the existing on-premises switches. Normally, I don’t like to double up Management and vMotion on one port, but with only 4 Ethernet ports on the hosts, we’re a bit limited in our options. Since this is such a small environment, there won’t be a lot of guest vMotion activity anyway.

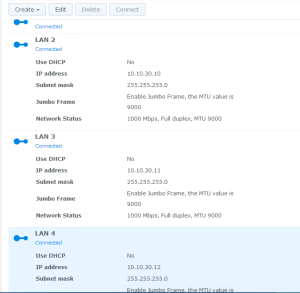

The first thing that we have to do is to set up the networking on our Synology. In this example, I used LAN ports 2, 3, and 4 for the iSCSI storage, giving them each a static IP address on the storage network. Port 1 has a static IP on the Management network. You’ll notice that I’ve already enabled jumbo frames and set them to MTU 9000.

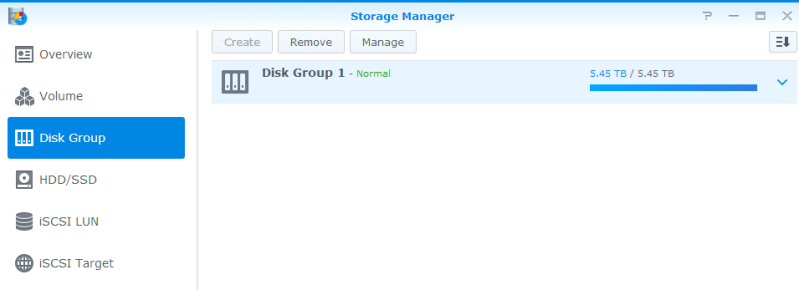

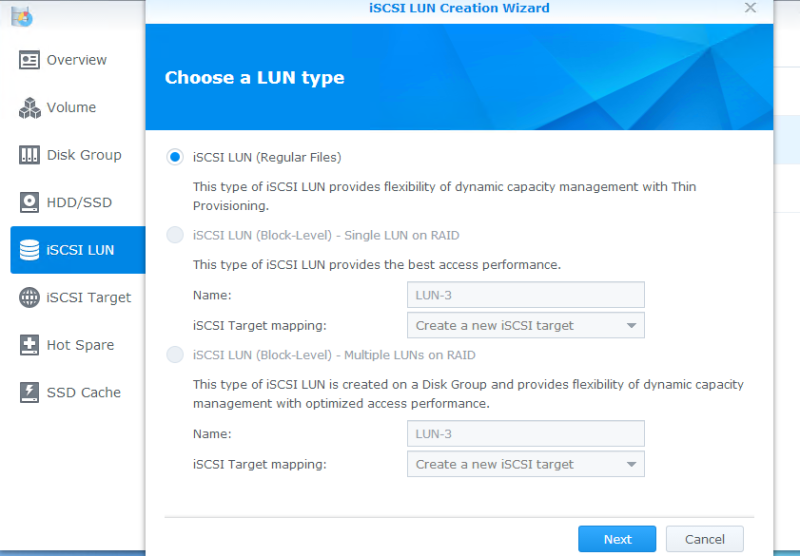

Now we’re going to create our LUNs. Synology doesn’t support VAAI 100% across the board on their hardware. In order to have full support, you have to create your LUNs as “iSCSI LUN (Regular Files)” rather than as block storage. You’ll see this in a minute. To get ready to do that, we’ll first create a Disk Group. Open the Storage Manager on the DSM. In this example, you’ll see that I’ve created one already and I opted to give it the full size of the three-drive array:

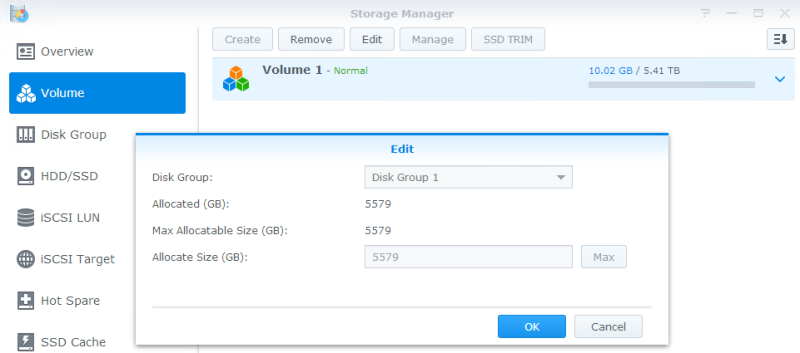

Next, create a Volume on the Disk Group. Again, you’ll see I’ve already created a Volume and I again opted to fill the Disk Group created previously.

Now, we’ll create our first LUN. Click on iSCSI LUN, and then click Create. You’ll notice that only the top option is available. That’s due to the way we created the Disk Group and Volume. The two previous steps are only necessary if you wish to use VAAI. Your iSCSI will still work with the Block-Level storage; you just won’t have hardware acceleration support in ESXi.

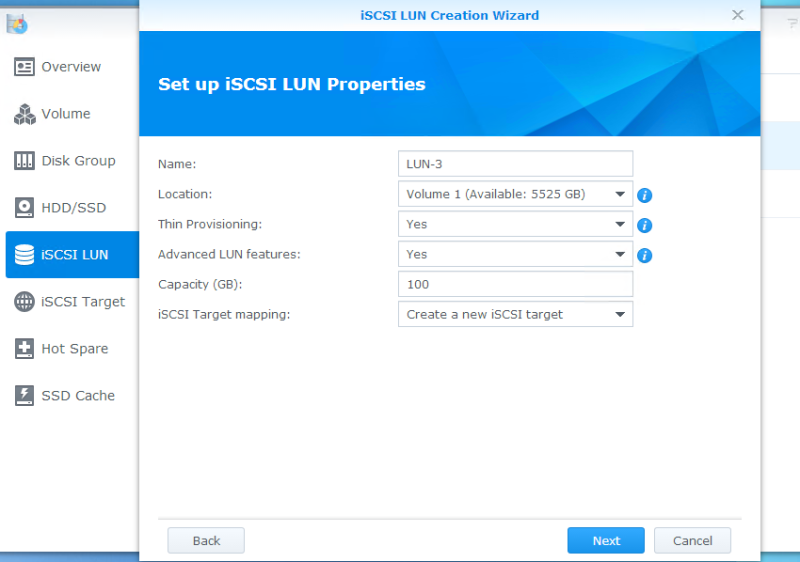

Click Next and choose the options for your LUN. The critical step here is to ensure that you set “Advanced LUN features” to YES. I’ve also heard that Thin Provisioning must be enabled, but I was not able to confirm that in my testing. If you haven’t already created an iSCSI target, go ahead and do it in this wizard.

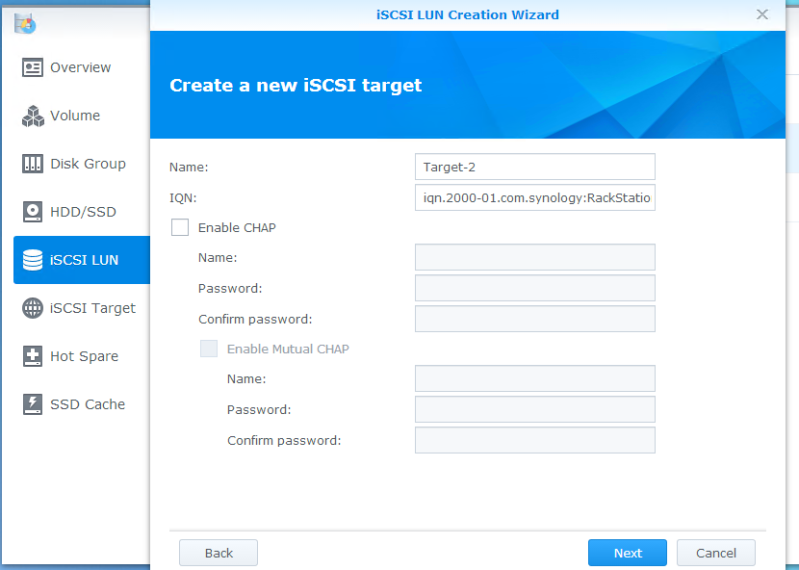

In this example, I chose not to enable CHAP.

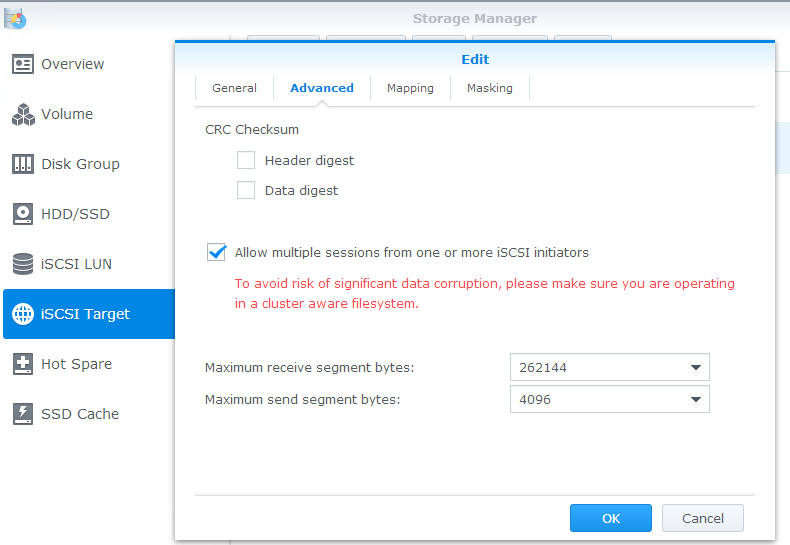

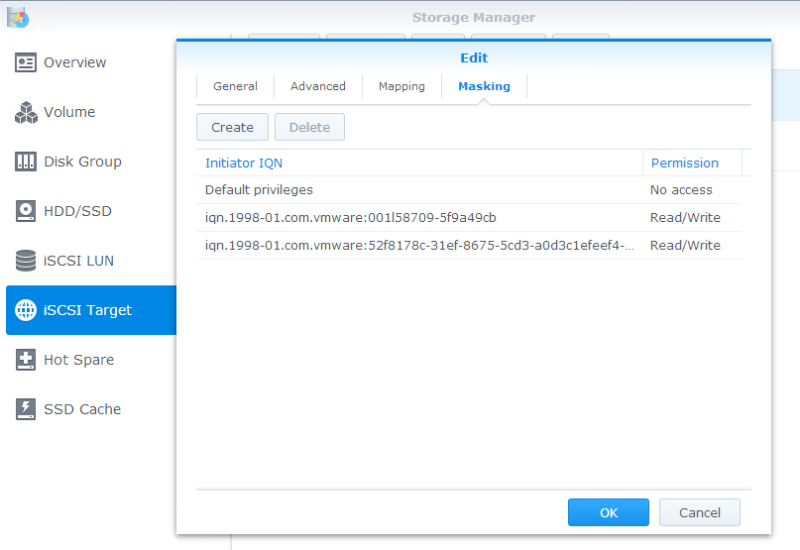

Next, click on iSCSI Target and edit the target you created in the last step. It’s critical that you enable “Allow multiple sessions…” here since we’ll have several network cards targeting this LUN. You may also wish to set up masking. In the second picture, I’ve blocked “Default privileges”, and then added the iSCSI initiator names for my two hosts. This will prevent stray connections from unwanted hosts (or attackers).

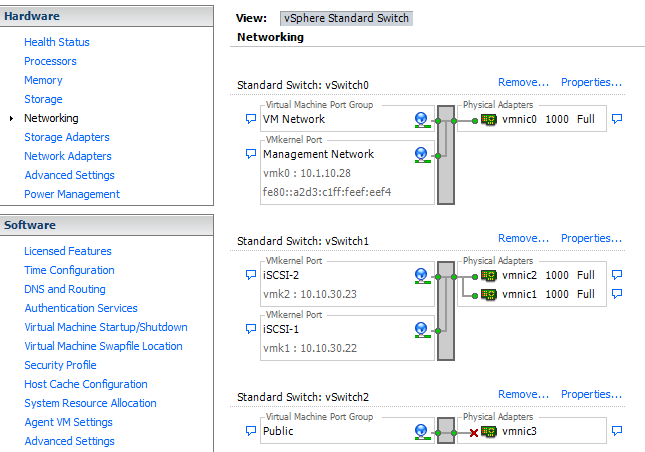

Now, let’s jump over to one of our hosts and set up the networking and iSCSI software adapter. You’ll need to do this on each host in your cluster. For this exercise, we’re using the VMware vSphere Client (the Windows desktop client) because I haven’t completed the build yet, so I don’t have a vCenter Server to connect a web browser to.

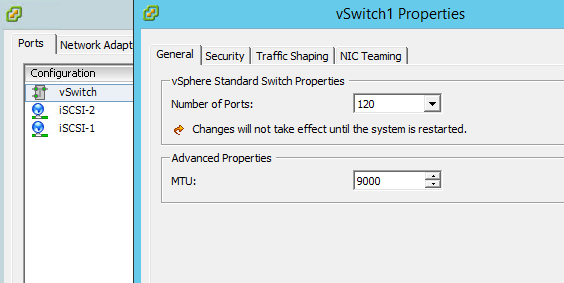

After you connect to your host, set up your storage networking. In this example, we’re using two of our four Ethernet ports for iSCSI storage. Create a vSwitch and two VMkernel ports, each with its own IP address on the storage network as shown. If you’re using jumbo frames, set the MTU on both the vSwitch and the VMkernel ports to match the NAS.

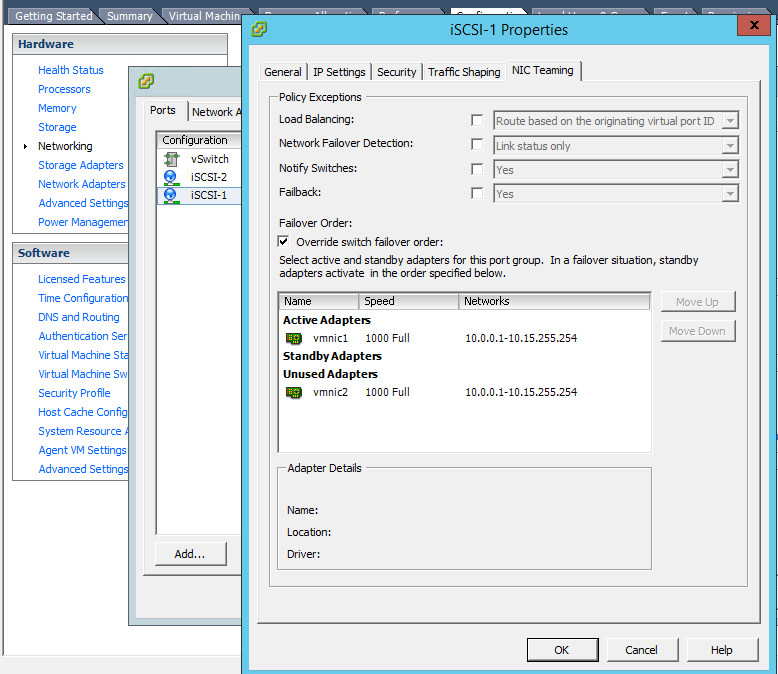
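Once the MTU is set on the NAS, the switch, the vSwitch, and the VMkernel ports, it is worth confirming that jumbo frames actually pass end to end. One common check is a ping that fills the frame with the don’t-fragment bit set. The short Python sketch below only works out the payload size and prints the matching `vmkping` line; it assumes plain IPv4 with a 20-byte header (no options) plus the 8-byte ICMP header. The `vmk1` interface name and the NAS address are placeholders, not values confirmed from this build.

```python
IPV4_HEADER = 20  # bytes, assuming an IPv4 header with no options
ICMP_HEADER = 8   # bytes


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def vmkping_command(mtu: int, vmk: str, target: str) -> str:
    """Build a vmkping line: -I selects the VMkernel port,
    -d sets don't-fragment, -s sets the payload size."""
    return f"vmkping -I {vmk} -d -s {max_ping_payload(mtu)} {target}"


if __name__ == "__main__":
    # Placeholder interface and address; substitute your own.
    print(vmkping_command(9000, "vmk1", "10.10.30.10"))
```

If the ping fails at this size but succeeds at the standard-frame equivalent (1472 bytes for MTU 1500), some device in the path is probably still at the default MTU.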

On each VMkernel port, edit the NIC Teaming settings and override the switch failover order. The two ports should have opposite configurations. As shown in this example, iSCSI-1 has vmnic1 “Active” and vmnic2 “Unused”, while iSCSI-2 has the reverse (vmnic2 “Active” and vmnic1 “Unused”). Essentially, this binds each VMkernel port to a specific physical LAN port. Later on, we’ll teach the host how and when to utilize the ports.

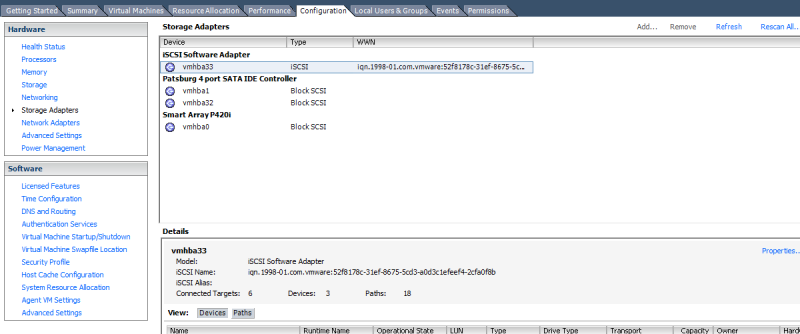

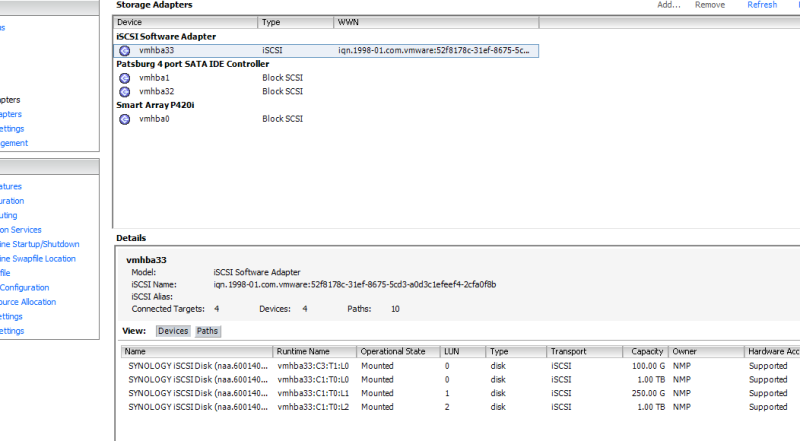
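The failover-order rule is easy to get wrong when you repeat it across several hosts, so here is a small Python sketch that checks a planned layout before you click through the dialogs. It is only a model of the rule as I understand it: each iSCSI port group gets exactly one active uplink and no standby uplinks. The check for an uplink shared between port groups simply mirrors the one-to-one layout used in this post. The dictionary format is made up for illustration and is not something ESXi exports.

```python
from collections import Counter


def validate_port_binding(teaming):
    """Return a list of problems; an empty list means the layout looks bindable.

    `teaming` maps a port group name to a dict with optional
    "active", "standby", and "unused" lists of uplink names.
    """
    problems = []
    active_counts = Counter()
    for portgroup, policy in teaming.items():
        active = policy.get("active", [])
        standby = policy.get("standby", [])
        if len(active) != 1:
            problems.append(
                f"{portgroup}: needs exactly one active uplink, has {len(active)}"
            )
        if standby:
            problems.append(f"{portgroup}: move standby uplinks to unused")
        active_counts.update(active)
    for nic, count in active_counts.items():
        if count > 1:
            problems.append(f"{nic}: active on {count} port groups")
    return problems


if __name__ == "__main__":
    # The layout from this post: opposite active/unused uplinks.
    layout = {
        "iSCSI-1": {"active": ["vmnic1"], "unused": ["vmnic2"]},
        "iSCSI-2": {"active": ["vmnic2"], "unused": ["vmnic1"]},
    }
    print(validate_port_binding(layout))
```

Leaving both uplinks active on a port group (the vSwitch default) is the mistake this is meant to catch.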

Next, click on Configuration and then Storage Adapters. Add your software HBA by clicking Add. You’ll notice I’ve already done this step.

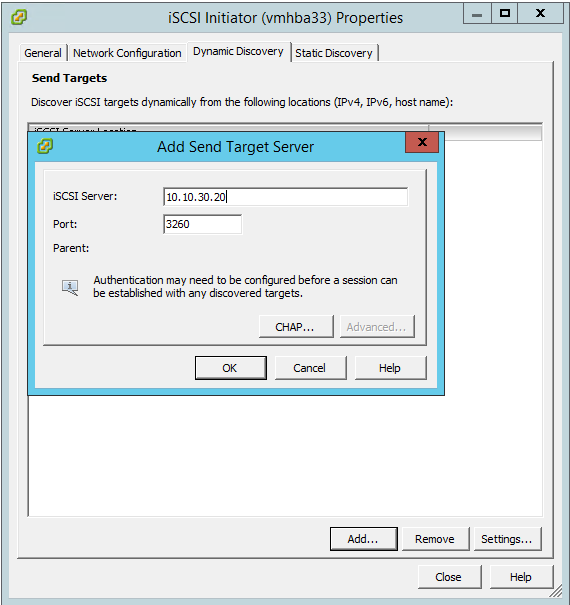

Highlight the iSCSI Software Adapter you just created and click on Properties. If you click on Configure (on the General tab), you can get the iSCSI initiator name to put in the Masking section we talked about a little while ago. If you use CHAP, you’ll also want to configure it here (globally) or on the individual targets. Click on the Dynamic Discovery tab and click Add. Here, you’ll put in the IP address of one of the iSCSI ports we set up on the NAS. You only have to put in one, but you may want to put in two for redundancy. Any remaining ethernet ports will be discovered automatically and will appear on the Static Discovery tab.

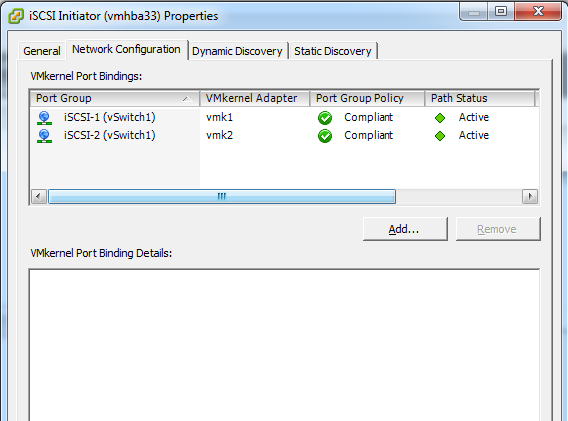
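Before adding discovery addresses, it can help to confirm that every NAS portal sits in the same subnet as the iSCSI VMkernel ports. If it does not, the host will generally try to reach the target through its default gateway, which usually lives on the management network rather than the storage network. The Python sketch below uses the standard `ipaddress` module to flag any target outside a VMkernel port’s subnet. The host address shown is hypothetical; only the 10.10.30.0/24 storage range comes from this build.

```python
import ipaddress


def off_subnet_targets(vmk_ip, netmask, targets):
    """Return the target addresses that are NOT in the VMkernel port's subnet."""
    network = ipaddress.ip_interface(f"{vmk_ip}/{netmask}").network
    return [t for t in targets if ipaddress.ip_address(t) not in network]


if __name__ == "__main__":
    # Hypothetical VMkernel address; NAS portals assumed on the storage subnet.
    stray = off_subnet_targets(
        "10.10.30.20", "255.255.255.0", ["10.10.30.10", "10.10.30.11"]
    )
    print(stray or "all targets are on the storage subnet")
```

An empty result means the discovery traffic should stay on the storage vSwitch.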

Now, click on the Network Configuration tab. Click on Add and individually add the two iSCSI vmkernel ports. In the screenshot, you’ll see that the path status for both physical links is “Active”. This is our Active/Active multipathing.

Now, go to Storage and rescan your HBAs. Once the rescan is complete, you can click on Add Storage to add your new LUN as normal. You’ll see that the Hardware Acceleration state of your LUNs is “Supported”. That means all VAAI primitives are functional.

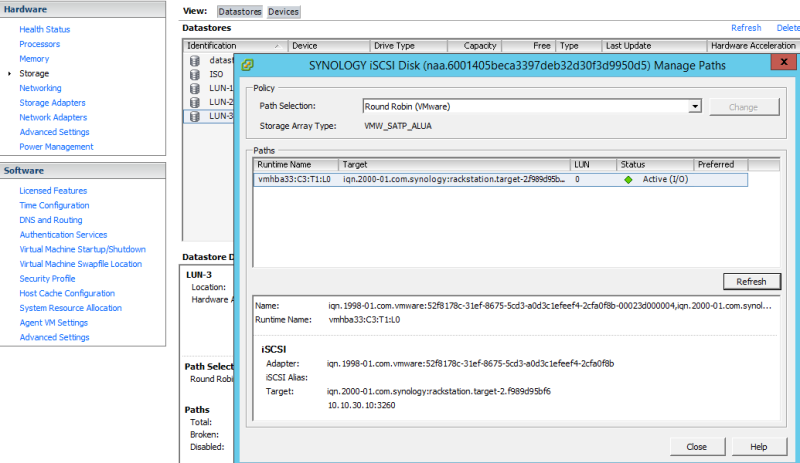

The very last thing we need to do is to enable Round Robin on our LUN, which makes the host rotate I/O across both iSCSI network cards instead of loading it all onto a single port. Through the GUI, you’ll need to do this for each LUN on each host. Alternatively, you can set the default path selection policy for the storage array type plugin (SATP) and then reboot the server, which applies it to every LUN claimed by that plugin. The command below targets VMW_SATP_ALUA, so confirm that this is the storage array type claiming your LUNs (the Manage Paths dialog shows it) before relying on it. To set it globally, SSH into your host and execute:

    # esxcli storage nmp satp set -s VMW_SATP_ALUA -P VMW_PSP_RR

Otherwise, to set it per-LUN, go back to Storage, highlight your LUN, and click on Manage Paths. Change your Path Selection to Round Robin and click Change.

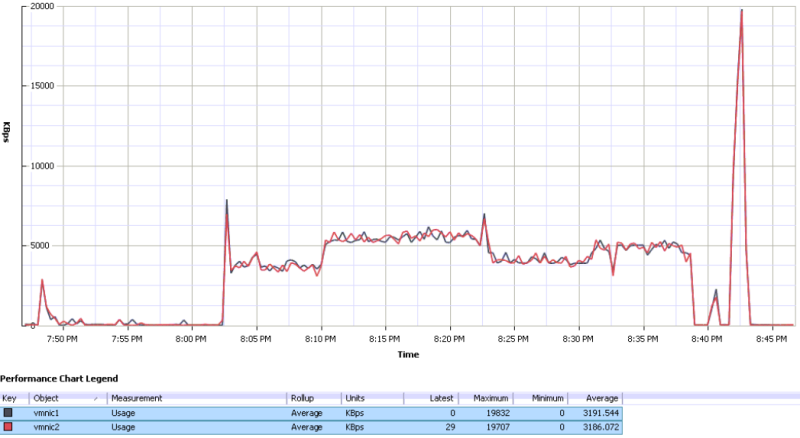

Here, you can see a utilization chart with traffic being balanced across both vmnics to the storage array. Remember, it’s using Round Robin, so it’s not true load balancing, but as you can see it does a pretty good job of using both links. I highlighted the adapters so that both lines would show up more clearly.
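To illustrate why Round Robin is not true load balancing, here is a toy Python model of the policy. It assumes the path changes after a fixed number of I/Os (1000 is, as far as I know, the ESXi default) and ignores queue depth, latency, and everything else a real host does. The workload is deliberately contrived: runs of small I/Os alternate with runs of large ones, so both paths carry the same number of I/Os but very different byte counts.

```python
from itertools import cycle


def round_robin(io_sizes, paths, iops_limit=1000):
    """Assign each I/O to a path, moving to the next path after
    `iops_limit` I/Os. Returns {path: [io_count, total_bytes]}."""
    stats = {p: [0, 0] for p in paths}
    rotation = cycle(paths)
    current = next(rotation)
    sent_on_current = 0
    for size in io_sizes:
        if sent_on_current == iops_limit:
            current = next(rotation)
            sent_on_current = 0
        stats[current][0] += 1
        stats[current][1] += size
        sent_on_current += 1
    return stats


if __name__ == "__main__":
    # Contrived workload: 1000 x 4 KiB I/Os, then 1000 x 64 KiB I/Os, twice.
    workload = ([4096] * 1000 + [65536] * 1000) * 2
    for path, (count, total) in round_robin(workload, ["vmnic1", "vmnic2"]).items():
        print(f"{path}: {count} I/Os, {total} bytes")
```

Real workloads are rarely this lopsided, but the model shows why the two lines on the chart track each other only roughly. The switching threshold can be changed per device with `esxcli storage nmp psp roundrobin deviceconfig set` (the `--type=iops` and `--iops` options); whether a lower value helps on a Synology is something I have not benchmarked here.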

That’s it! You now have Active/Active Multi-Path I/O configured to your Synology NAS with full hardware acceleration enabled! In another post, I will show the performance and throughput characteristics of the environment we built so that you can see how the traffic looks. Be sure to Like and reblog this if you found it helpful. C’mon, people, I need the traffic!

36 thoughts on “How to set up VMware ESXi, a Synology iSCSI NAS, and Active/Active MPIO”

Very nice!

Very informative post. Question, when I go to the Storage Adapters and add the IP address to the Dynamic Discovery it adds it after a long wait. However, the rescan doesn’t show anything new, and I can’t add the storage device. Both the hosts and the synology are on the same subnet/LAN. Any ideas?

Are you sure you’re adding the right address? Also, did you set up any iSCSI masking or firewalling on the Synology? It sounds like it’s rejecting the connection.

I think I figured it out. The people who set this up plugged the iSCSI ports into a separate Dell switch. Unless the Synology is plugged into that switch, it won’t see it. At least I hope that’s it. Thanks again for this great tutorial. At least it confirmed I had everything set up right. I made one modification, and that was to use block level, as it was mentioned it’s quicker than file level for virtual environments. Thanks!

Glad it’s working for you! They should not need to be on the same switch, so I would be taking a very close look at the networking architecture. Maybe it’s not trunking VLANs properly. The whole point of iSCSI/NFS is that they can run over standard TCP/IP without even being in the same broadcast domain.

As for block vs. file, it really depends on the capabilities of your shared storage. Some vendors excel at one over the other. For the Synology, unless something has changed recently, you won’t get VAAI support unless you use File. You can still create your LUNs and your hosts will still see it, but VAAI will be disabled. I mentioned that in the post. You can see whether VAAI is enabled in this screen: https://frankstechsupport.files.wordpress.com/2014/07/vaai.png?w=800&h=440

Over on the right, under Hardware Acceleration, you’ll see “Supported” or “Unsupported”.

It’s the way they set it up. The switch used for iscsi is not connected to the LAN. So, it’s a physical separation, not a VLAN or anything like that. I’ll probably be changing it, but that’s how it is now. Nice huh? Thanks for the info. I’ll read up more on VAAI.

Hi,

It looks like your average transfer speed is 30 MB/s per NIC.

We are experiencing the same issue. If we create a 1GB file on a drive on that LUN using the same setup as you described here, the top write speed we get is 90 MB/s across 3 NICs (about 27 to 30 MB/s each).

If we don’t use RR and just use a single NIC, we get 115 MB/s.

You said you were getting the full bandwidth of all the connections. Can you possibly post a benchmark of it using the Synology default connection type, and a benchmark of it with RR?

I spent about 6 weeks testing different settings, and customising the esxi settings, and only managed to get minimal improvement but never as fast as the single connection was.

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1002598

Wow, that’s really interesting. Unfortunately, this system is in full production with the customer now, so I can’t rerun the benchmarks. Also, I didn’t do rigorous testing on the benchmarks when I wrote the post, so I can’t confirm your findings. Please let me know if you get more information and I’m definitely going to see if I can lab this up if I can scare up a test Synology.

Thanks for your good write-up 🙂

I have a SOHO setup with VMware with two hosts. I just got a DS1815+ with 6 x 3TB WD Reds. I’m trying out using the Synology as a datastore to run a couple of the VMs. Currently the hosts all have local SLOW HDDs where the VMs run from. Performance is pretty bad as expected.

I have several switches, one of them a 3COM 4200G which can do Link Aggregation. I’ve tested it and the DS1815+ successfully creates a bond (tried 3 ports). I was wondering if that would be better than using the multipath method you’ve used here?

I too have 4 x 1Gb ports in each of my hosts, so setting them up similar to what you’ve done would be good.

Thanks 🙂

I don’t believe that you would see better performance. If this is still in setup or lab, might be a good thing to test and write back.

After a bit more reading I think I’ll remove the LAG and try MPIO as you’ve done here. If you were to use a physical switch that has public traffic and iSCSI, would you separate them into VLANs – any disadvantage doing that?

I would absolutely separate the traffic. iSCSI traffic should be isolated. You don’t want any extraneous traffic impacting your storage traffic, and you don’t want to create a security issue by having the storage vlan accessible from your clients. Ideally, I’d put the iSCSI traffic onto its own switch, not just its own vlan, but for smaller companies with a tight budget, that’s not always possible.

The LAG won’t help your bandwidth for iSCSI or for NFS, as it doesn’t split a connection stream between the ports. Your max speed through a LAG will be the max speed of a single port, so on a 1Gb switch, it will run at 1Gb.

MPIO is so far not optimised for speed with the Synology units. Even if you have 4 iSCSI connections, all on separate switches, all on different subnets, all on different VLANs (which is the ideal scenario), you allow multiple connections on your LUNs, and you configure round robin correctly on the host, your speeds won’t be 4 x 1Gb. It will be more like 1.1 x 1Gb of bandwidth.

We use NFS now because even after contacting synology in taiwan about getting the MPIO round robin working correctly, they weren’t able to get the multiple links aggregating the bandwidth using round robin.

They blamed the way the network driver worked on the synology.

NFS gives us around 15% more IOPS than the iSCSI connection, and it also uses the whole bandwidth of the link, around 120 MB/s.

Maybe one day the Synos will allow for a high performance round robin. 😦

Thanks Jamie,

That’s a bummer then! Are you just using single nic’s in your hosts for the NFS traffic? I did briefly come across a VAAI enabling driver for vmware for NFS, although I’ve not explored that yet. Have you seen that or used it?

Was your work with Synology support recent – would you be aware if they’ve made or make improvements to the networking drivers in the future I.e. Are you still pursuing this or have you just given up and just gone the NFS route?

Cheers.

Frank,

Can I ask how you set up your default gateway(s) when you created your iSCSI VMkernel ports? I’ve done something very similar to what you have, but my iSCSI traffic seems to be routed through the management network and not through the iSCSI NICs.

My configuration is:

vSwitch0

Management Network 192.168.0.7

VM Network VM’s on 192.168.0.x

Subnet mask 255.255.0.0

Default gateway 192.168.0.1

vSwitch1

iSCSI-1 10.10.30.20

iSCSI-1 10.10.30.21

iSCSI-1 10.10.30.22

subnet mask 255.255.255.0

So when I create the iSCSI VMKernel ports, the default gateway is 192.168.0.1 – is that the problem – it routing through the public network?

Physically, I have a couple of 24 port switches on the public network and on one of them have connected all the iSCSI traffic using port VLAN to separate it. The management network is connected to the public network (maybe that’s wrong?)

Sorry for the questions – my limited understanding of VMware networking principles probably doesn’t help!

Many thanks,

Paul.

Great Comprehensive article.

Keep it up!

Great article. Was able to setup Synology with ESXi host easily.

Thanks for your hard work in putting this together.

Thanks! I’m glad you found it helpful. I had…an interesting time…trying to get mine up and running originally, so I’m glad I could make it easy for the next guy.

Big thanks, just what I was looking for! I’m a newbie in the domain and I understood almost everything!

I have a few questions :

– Do the 2 switches have to be linked together ?

– Why is the management port of the hosts linked to the ISCSI switch (10.10.30) and not to the other one (10.10.10) ?

– Why does the vmk0 have the address 10.1.10.28 and not 10.10.10.28 ?

Thanks

– No, the two switches in this exercise are linked to permit management of the storage network. The specific network design will be dictated by your environment and requirements.

– It’s not. The management is on the “public” (non-storage) side of the network, on vSwitch0.

– Because it’s not on the storage network.

– OK

– On your whiteboard, I see 3 cables going to the “storage” switch. 2 for ISCSI and what’s the 3rd one ?

– The 10.10.10.0/24 IPs aren’t on the storage network, are they ?

This is indeed a very good write-up. Unfortunately I am running into the same poor performance issues with the DS1815+ using iSCSI. I have been pulling my hair out and tweaking every way imaginable but I’m stuck with slow performance. This is extremely disappointing after paying over $1000 for home lab storage. I’m going to try NFS and the VAAI plugin next. Someone please post if you manage to get good performance out of Synology or if some future DSM release fixes this issue!

Hey Ken – I had similar poor performance issues until I got in touch with Synology Support and they explained where I messed up the config. What is your email? I wouldn’t mind reviewing what they found and helped me fix with you.

I was running a 1515+ with four NICs active, and four NICs for iSCSI on the ESXi host.

I was getting 150 MBPS with MPIO before the fix, now I get 280mbps.

I can’t get it higher due to software iSCSI overhead – but it’s a lot better than what it was before.

Hi Ricardo, can you send me some screenshots of the ESXi configuration ? I’m in the exact same situation: 4 NICs for iSCSI on each host (a vSphere cluster) and 4 NICs for iSCSI on a SHA RS3617xs. I get only 20-30 MB/s on each NIC when I check the DSM Monitor.

Thanks for this great write-up. However, I’m having some difficulty. I get all the way up to where I have to add the two VMkernel ports I created to the iSCSI initiator. In your example it shows “Path Status” as “Active”; on mine it keeps showing “Not used” and I have no clue why. I was able to add the two VMkernel ports with two different IPs (I used the same IPs as in your example), but for some reason one of my vmnics that is associated with that vSwitch doesn’t seem to be getting any data; it just shows as “None” under “network connection”. My other NIC (vmnic1) is showing the discoverable network to be 10.10.30.10 – 10.10.30.11. These are the two IPs I am using on the Synology on two different interfaces.

I am using an X520-DA2 SFP+ 10GbE card in the server, and I am direct attaching to my Synology using SFP+ twinax cables. The X520-DA2 has two ports, and it seems to be seen as two separate NICs with two separate MAC addresses. The card also says in its specs that it supports MPIO as well as LACP. This would lead me to believe that I could team both NICs on the same card.

Hello! Thanks a lot for your guide.

I have set up my infra following your guide. It was very helpful for me. I have one question regarding performance.

I have also set up Round Robin for 2 NICs. But when I check the performance graph, I see that only one NIC has traffic on it; the other NIC has no traffic. I have double-checked all settings once again. Everything is OK.

1. Should the IP addresses of the iSCSI NICs be in different subnets?

2. Do I need to configure the Synology to run “Round-Robin” ?

3. When I check vSwitch settings –> Ports –> iSCSI ports have different IPs; but when I check vSwitch settings –> Network Adapters –> vmnic0 and vmnic1, in “IP range” I see the same string = 192.168.1.10-192.168.1.10. Is this normal, or is this the reason? How can I fix it?

Thank you.

Thank you for writing up this article. It was extremely helpful as a reference on setting up MPIO under VMware and ensuring my Synology NAS was configured properly.

Take care! 🙂

Hi Frank, happy to have come across your article. I have been working for a pharmaceutical company. In our remote offices we installed Double HP servers and EMC VNXe storage to create a remote office kit. I have a Synology RackStation installed at a client, in combination with 2 Dell diskless servers (VMware on flash). Looking at your whiteboard drawing, I’m confused about the 10.1.10.28 address. I see 10.10.10.x and 10.10.30.x. In the ESX configuration I see a 10.1.10.28 address which I initially thought was missing a ‘zero’. I saw that someone else asked about it as well. I honestly thought this had to be 10.10.10.28 to be reachable from the Public network. Do you mind elaborating on this so I understand the background ?

There is no 10.1.10.28 address in my whiteboard photo but maybe you’re seeing it as the Management Network address in the fat client config photo a few pages down? That address is not related to the configuration, but was only being used as a convenience. I was building this system as a new standalone network, but I needed temporary connectivity on my existing network. It’s been a few years since I built this, but I *believe* that my existing tech room build network (10.1.10.0/24) was almost identical to the network into which this was ultimately being installed (10.10.10.0/24), so I kept the same addresses on the Public and Management sides except for the second octet. For the purposes of this tutorial, though, the “Public” and “Management” networks are completely irrelevant and can be whatever your network requires. The Storage side (the 10.10.30.0/24 network) is really all you need to focus on, and obviously those addresses will be different for you anyway.

Hi Frank,

It was indeed in one of the vSphere client screenshots – it looked that much similar to your ranges on the whiteboard that I was wondering.

I made some changes to our setup today, based on your article as I had some strange behavior from VM’s that I could not put my finger on.

Thanks for your feedback – I appreciate it !

Awesome blog, I was just looking to do the exact same thing, so this helped fill in some blanks. I realize this is over 2 years old, so I hope you are still answering questions as I have a few.

#1 You mentioned joining the storage network with the normal network, so you could access it administratively. I also noticed though that you used 3 nics for the storage network, and one back to the normal network for admin. Would you have had to physically connect those 3 nics back to the normal network since you had the one admin interface?

#2 Does Synology allow you to segment storage traffic to a certain interface, and admin traffic to another interface?

#3 Synology allows for bonding NICs. Why did you decide to do 3 separate IP addresses for the Synology NICs vs bonding the NICs as one cluster address?

1) I’m not sure I understand your question. The Synology has 4 physical NICs. In this exercise, I have three of them physically wired to the storage-side switch for iSCSI only and one wired to the administrative network switch. Your specific networking configuration will vary depending on your architecture.

2) I don’t believe that you can specify which NIC will be used for iSCSI traffic on the Synology. It should be based on IP address and iSCSI initiator node names. However, Synology does support VLAN tagging, so you can probably segment traffic that way instead.

3) Because if you bond all the NICs, you get redundancy but not load balancing. On the VMware side, for example, all traffic flows will be dumped onto the first NIC in the bond and the remaining NIC(s) will be all but ignored. By breaking it down into separate paths, we get a sort of poor-man’s load balance across all participating NICs. That said, you COULD do it. It will technically work, but that design fulfills a different architecture requirement.

Thanks for getting back to me. I should have been more clear.

1) In one of your comments you said, “– No, the two switches in this exercise are linked to permit management of the storage network. The specific network design will be dictated by your environment and requirements.” I was wondering why that was needed when you had 1 of the 4 Synology nics already on the administrative subnet.

2) Can’t wait to try it, thanks.

3) Ugh, I didn’t know that as the Synology is still in the mail. Watching this video here https://www.youtube.com/watch?v=ZVFQ5FFaDe4 at 4:28 it looked like Synology had two options, IEEE 802.3ad Dynamic Link Aggregation and Network Fault Tolerance only. The Dynamic Link Aggregation looked to me like load balancing, but am I misunderstanding it?
